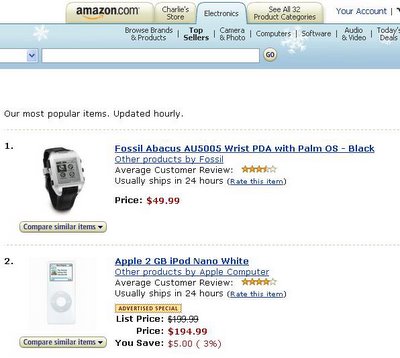

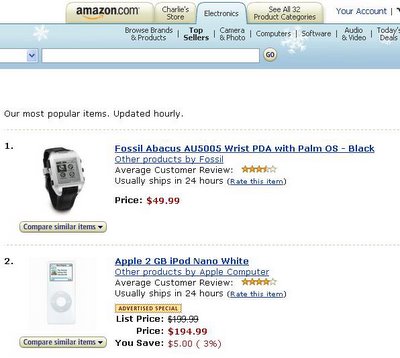

The image above was sent to me today by a former PalmSource colleague. Yes, that's a list of Amazon's best-selling consumer electronics products.

And yes, that's the Fossil Palm OS watch at #1, outselling the iPod Nano.

The Fossil saga is one of the saddest stories in the licensing of Palm OS. Fossil had terrible manufacturing problems with the first generation product, and so the company became cautious about the market. I think the underwhelming performance of its Microsoft SPOT watches didn't help either. When the second generation Palm OS product wasn't an immediate runaway success, Fossil backed away from the market entirely. They killed some achingly great products that were in development, stuff that I think would have gone over very well. And now here's that original Palm OS watch on top of the sales chart.

You don't want to read too much into this; the Fossil watch is on closeout, and ridiculously discounted. And being on the top of a sales list at one moment in time is not the same as being a perennial best-seller.

But still…this is the holiday buying season, and that's a pretty remarkable sales ranking. It makes me wonder, was the Fossil product a dumb idea? Or was it just the wrong price point, at the wrong time?

It's very fashionable these days to dismiss the mobile data market, or to think of it as just a phone phenomenon. But we're very, very, very early in the evolution of mobile data -- equivalent to where PCs were when VisiCalc came out. We haven't even come close to exploring the price points, form factors, and software capabilities that mobile devices will develop in the next 10 years. A lot of experimental products are going to fail, but some are going to be successes. Anyone who says the market is played out, or that a particular form factor will “never” succeed, just doesn't understand the big picture.

I don't know that an electronic calendar watch is ever going to be on everyone's wrist, but wait another five years and you'll be able to make a pretty powerful wristwatch-sized data device profitably for $50. And I'll bet you somebody's going to do something very cool with it.

(PS: Just so no one thinks I'm trying to pull a fast one, I should let you know that the Amazon list changes hourly, and the sales ranking of the Fossil watch seems to jump around a lot. But the fact that it's even in the top 25 impresses me.)

Is Wikipedia wonderful or awful? I’m going to argue that it’s mostly irrelevant. But first some background…

In the last month and a half there has been a kerfuffle between Tim O’Reilly and Nicholas Carr regarding Wikipedia. It started when O’Reilly posted a very interesting essay in which he laid out his definition for Web 2.0. It’s a long and pretty ambitious document with a lot of good ideas in it. If you’re interested in Web 2.0 it’s an important read.

It’s also kind of amusing because O’Reilly managed to give shout-outs to an incredible number of startups and bloggers. I haven’t seen that much name-dropping since Joan Rivers guest-hosted the Tonight Show.

Anyway, O’Reilly cited Wikipedia as an example of a great Web 2.0 venture. Then another very smart guy, Nicholas Carr, posted an essay called The Amorality of Web 2.0. Carr is positioning himself as kind of a gadfly of the tech industry, and in this essay he called out O’Reilly and a lot of other Web boosters, criticizing the Web 2.0 campaign in general, and specifically citing Wikipedia. He quoted some very bad Wikipedia articles and used them to argue that Web 2.0 enthusiasts are ideologues more than practical business people.

Like O’Reilly’s essay, Carr’s is a very good read, and you should check it out.

Since then I’ve seen both the O’Reilly and Carr essays quoted in a lot of places, usually to either praise or damn Wikipedia, and by extension all wikis. And I think that’s a shame.

To me, Wikipedia is a fun experiment but a fairly uninteresting use of wiki technology. The world already has a number of well-written encyclopedias, and we don’t need to reinvent that particular wheel. Where I think Wikipedia shines is in areas a traditional encyclopedia wouldn’t cover. For example, it’s a great place to find definitions of technical terms.

To me, that’s the central usefulness of a wiki -- it lets people with content expertise capture and share knowledge that hasn’t ever been collected before. By their nature, these wikis are interesting only to narrow groups of enthusiasts. But if you put together enough narrow interests, you’ll eventually have something for almost everyone.

Let me give you three examples:

First, the online documentation for WordPress. As part of my experiment in blogging, I’ve been playing with several different blog tools. I was very nervous about WordPress because it’s freeware, and as a newbie I was worried about accidentally doing something wrong. But when I installed it, and worked through the inevitable snags, I discovered that WordPress has some of the best online documentation I’ve seen. It’s a stunning contrast to for-pay products like Microsoft FrontPage, which unbelievably doesn’t even come with a manual.

Why is the WordPress documentation so thorough? Well, they started with a wiki, and gradually systematized it into an online suite of documents called the WordPress Codex. I found WordPress easier to install and work with than a lot of paid-for programs, and a major reason was the wiki-derived documentation.

Second example: the Pacific Bulb Society. Several years ago a nasty fight broke out on the e-mail discussion list of the International Bulb Society, a traditional old-style group of enthusiast gardeners. It was the sort of interpersonal nastiness that sometimes happens on mail lists. A group of people got so angry that they went off by themselves and founded the Pacific Bulb Society. And they set up a wiki.

In just a few years, the enthusiasts feeding that wiki have created what’s probably the most comprehensive online collection of photos and information about bulbs anywhere. In a lot of ways, it’s better than any reference book.

Third example: the Palm OS Expert Guides, a collection of 50 written guides to Palm OS software. I helped get the Expert Guides going, so I saw this one from the inside. PalmSource didn’t have the budget or vertical market expertise to document the software available for Palm OS, but a group of volunteers agreed to do it. The Expert Guides are not technically a wiki, but the spirit is the same.

Scratch around on the Web and you’ll find volunteer-collected information and databases on all sorts of obscure topics. To me, this is the real strength of the wiki process: enthusiasts collecting information that simply hadn’t been collected before, because it wasn’t economical to do so. As wiki software and other tools improve, this information gets more complete and more accessible all the time.

I think that’s pretty exciting.

In this context, the whole Wikipedia vs. Encyclopedia Britannica debate is kind of a sideshow. That’s not where the real action is.

Nicholas Carr does raise a legitimate concern that free content on the Web is going to put paid content providers out of business. But Wikipedia didn’t kill printed encyclopedias -- web search engines did that years ago. And I don’t think free content was the cause of death; the problem was that the encyclopedias weren’t very encyclopedic compared to the avalanche of information available on the Web. And the encyclopedia vendors acted more like carriers than creators. But that’s a subject for a different essay…

I did an online search today for the words “Rokr” and “failure” together in the same article. There were 49,700 hits.

I don’t want to pick on Motorola, but the speed at which its two-month-old product was labeled a failure is fascinating -- and a great object lesson for companies that want to play in the mobile space. Here are some thoughts.

First off, it’s hard to be certain that the Rokr actually is a failure, since there are no official industry stats on phone sales by model. But the circumstantial evidence is pretty damning. Most importantly, Cingular cut the phone’s price by $100 in early November. I can tell you from personal experience that no US hardware company ever introduces a device expecting to cut its price just a couple of months after launch. It causes too many logistical problems, and pisses off your early buyers.

Also, several reporters have noticed that Motorola and Apple both gave very telling comments about the product. Steve Jobs called it “a way to put our toe in the water,” which is about as tepid an endorsement as you can get. Ed Zander famously said “screw the Nano” about the product that upstaged the Rokr’s announcement (some people claim Zander was joking, but as one of my friends used to say, at a certain level there are no jokes).

Wired has even written a full postmortem report on the product.

If we accept that the Rokr is indeed a failure, then the next question to ask is why. There are a lot of theories (for example, Wired blames the controlling mentalities of the carriers and Apple itself). But my takeaway is more basic:

Convergence generally sucks.

People have been predicting converged everything for decades, but usually most products don’t converge. Remember converged TV and hi-fi systems? Of course you don’t, neither do I. But I’ve read about them.

And of course you have an all-in-one stereo system in your home, right? What’s that you say? You bought separate components? But the logic of convergence says you should have merged all of them long ago.

Remember converged PCs and printers? I actually do remember this one, products like the Canon Navi. It put a phone, printer, fax, and PC all together in the same case. After all, you use them all on the same desk, they take up a lot of space, so it makes a ton of sense to converge them all together. People use exactly the same logic today for why you should converge an MP3 player and a phone. And yet the Navi lasted on the market only a little longer than the Rokr is going to.

The sad reality is that converged products fail unless there is almost zero compromise involved in them. It's so predictable that you could call it the First Law of Convergence: If you have to compromise features or price, or if one part of the converged product is more likely to fail than the others (requiring you to throw out the whole box), forget about it. The only successful converged tech products I can think of today are scanner/fax/printers. They’re cheap, don’t force much of a feature compromise, and as far as I can tell they almost never fail. But they are the exception rather than the rule.

(By the way, I don’t count cameraphones as a successful converged product because they’re driving a new more casual form of photography rather than replacing traditional cameras.)

Looked at from this perspective, the Rokr was doomed because of its compromises. Too few songs, the UI ran too slow, the price was too high. You won’t see a successful converged music phone unless and until it works just like an iPod and doesn’t carry a price premium.

The other lesson of the Rokr failure is that if you do a high-profile launch of a mediocre product, you’ll just accelerate the speed at which it tanks. If Motorola had done a low-key launch of the Rokr and had positioned it as an experiment, there might have been time to quietly tweak the pricing and figure out a better marketing pitch. But now that 49,700 websites have labeled the product a failure, rescuing it will be much, much harder.

I wrote last month that I thought Google was likely to offer to install free WiFi in more Bay Area cities. Now the company has offered to do just that in Mountain View (a city north of San Jose and site of Google’s headquarters).

You can view the Mountain View city manager’s summary of the proposal, and a letter from Google, in a PDF file here. A couple of interesting tidbits:

The city manager writes: “Deployment in Mountain View is considered a test network for Google to learn…future possible deployment to other cities and in other countries.” (My emphasis.) I wonder where Mountain View got the idea that Google wants to deploy WiFi outside the Bay Area.

Google writes: “We believe that free (or very cheap) Internet access is a key to bridging the digital divide and providing access to underprivileged and less served communities.” Okay, I believe that too -- but if you know Mountain View you’ll know that the main digital divide there is between people who have DSL and people who have cable modems. If you want to bridge a real digital divide, offer WiFi for someplace like Oakland or East Palo Alto. But then you’d probably need to offer those people computers as well.

Google writes: “In our self-interest, we believe that giving more people the ability to access the Internet will drive more traffic to Google and hence more revenue to Google and its partner websites.” The San Jose Mercury News called that “unusual candor,” but I’d call it an understatement. If Google had said, “we’re planning to create a bunch of new for-fee services that we’ll promote like maniacs through the landing page,” that would have been unusual candor. As Mountain View’s summary states, “the agreement does allow Google in the future to charge a fee for enhanced services.”

I think it would be healthy for Google to come clean about this stuff. There’s nothing cities and users have to fear from Google’s new services, as long as Google doesn’t create a closed garden and promises to keep the network open. (Although you would need a Google ID to log in, and the service would take you first to a Google landing page.)

My questions: When will Google make that offer to the city where I live, San Jose? And why in the world is the San Jose City Council planning to spend $100,000 down and $60,000 a year of taxpayer money to build a WiFi network serving a small chunk of downtown when Google’s offering to serve whole cities for free?

Or, why Web 2.0 doesn't cut it for mobile devices

One of the hottest conversations among the Silicon Valley insider crowd is Web 2.0. A number of big companies are pushing Web 2.0-related tools, and there’s a big crop of Web 2.0 startups. You can also find a lot of talk of “Bubble 2.0” among the more cautious observers.

It’s hard to get a clear definition of what Web 2.0 actually is. Much of the discussion has centered on the social aspirations of some of the people promoting it, a topic that I’ll come back to in a future post. But when you look at Web 2.0 architecturally, in terms of what’s different about the technology, a lot of it boils down to a simple idea: thicker clients.

A traditional web service is a very thin client -- the browser displays images relayed by the server, and every significant user action goes back to the server for processing. The result, even on a high-speed connection, is online applications that suck bigtime when you start to do any significant level of user interaction. Most of us have probably had the experience of using a Java-enabled website to do some content-editing or other task. The experience often reminds me of using GEM in 1987, only GEM was a lot more responsive.

The experience isn’t just unpleasant -- it’s so bad that non-geeks are unlikely to tolerate it for long. It’s a big barrier to use of more sophisticated Web applications.

Enter Web 2.0, whose basic technical idea is to put a user interaction software layer on the client, so the user gets quick response to basic clicks and data entry. The storage and retrieval of data is conducted asynchronously in the background, so the user doesn’t have to wait for the network.

In other words, a thicker client. That makes sense to me -- for a PC.
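Here's a rough sketch of what that thicker client looks like in code. It's only an illustration, not anyone's actual framework: `ThickClient`, `slow_server`, and `wait_until_synced` are names I made up, and the "server" is just a dictionary behind an artificial delay. The point is that an edit shows up in the local copy immediately, while a background thread handles the slow trip to the server.

```python
import queue
import threading
import time


class ThickClient:
    """Hypothetical client: edits apply locally at once, sync runs in the background."""

    def __init__(self, send_to_server):
        self.local_state = {}            # what the user sees immediately
        self._pending = queue.Queue()    # edits waiting to reach the server
        self._send = send_to_server      # stand-in for a real network call
        worker = threading.Thread(target=self._sync_loop, daemon=True)
        worker.start()

    def edit(self, key, value):
        # Update the local copy first, so the user never waits on the network.
        self.local_state[key] = value
        self._pending.put((key, value))

    def _sync_loop(self):
        # Runs on a background thread and drains the queue one edit at a time.
        while True:
            key, value = self._pending.get()
            self._send(key, value)       # the slow part, off the user's path
            self._pending.task_done()

    def wait_until_synced(self):
        # Blocks until every queued edit has been sent.
        self._pending.join()


def slow_server(store, delay=0.05):
    """Build a fake 'network call' that writes into store after a delay."""
    def send(key, value):
        time.sleep(delay)                # simulated network latency
        store[key] = value
    return send


server_store = {}
client = ThickClient(slow_server(server_store))
client.edit("title", "Hello")
print(client.local_state["title"])       # available right away
client.wait_until_synced()
print(server_store["title"])             # the server has caught up
```

Notice that this design quietly assumes the server is always reachable; the background thread just takes a while to get there.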

Where Web 2.0 doesn’t make sense is for mobile devices, because the network doesn’t work the same way. For a PC, connectivity is an assumed thing. It may be slow sometimes (which is why you need Web 2.0), but it’s always there.

Mobile devices can’t assume that a connection will always be available. People go in and out of coverage unpredictably, and the amount of bandwidth available can surge for a moment and then dry up (try using a public WiFi hotspot in San Francisco if you want to get a feel for that). The same sort of thing can happen on cellular networks (the data throughput quoted for 2.5G and 3G networks almost always depends on standing under a cell tower, and not having anyone else using data on that cell).

The more people start to depend on their web applications, the more unacceptable these outages will be. That’s why I think mobile web applications need a different architecture -- they need both a local client and a local cache of the client data, so the app can be fully functional even when the user is out of coverage. Call it Web 3.0.

That’s the way RIM works -- it keeps a local copy of your e-mail inbox, so you can work on it at any time. When you send a message, it looks to you as if you’ve sent it to the network, but actually it just goes to an internal cache in the device, where the message sits until a network connection is available. Same thing with incoming e-mail -- it sits in a cache on a server somewhere until your device is ready to receive.*

The system looks instantaneous to the user, but actually that’s just the local cache giving the illusion of always-on connectivity.
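To make the outgoing half of that concrete, here's a minimal store-and-forward sketch. I don't know how RIM actually implements it, so treat everything here as an assumption: `Outbox` and `FakeNetwork` are invented names, and the "network" is a stub that raises `ConnectionError` when the device is out of coverage.

```python
from collections import deque


class Outbox:
    """Hypothetical on-device cache: 'sent' messages wait here until there is coverage."""

    def __init__(self, transmit):
        self._queue = deque()
        self._transmit = transmit    # assumed to raise ConnectionError when offline

    def send(self, message):
        # From the user's point of view the message is sent as soon as this returns.
        self._queue.append(message)
        self.flush()

    def flush(self):
        """Try to deliver queued messages in order; stop at the first failure."""
        delivered = 0
        while self._queue:
            try:
                self._transmit(self._queue[0])
            except ConnectionError:
                break                # still out of coverage, try again later
            self._queue.popleft()    # remove only after a successful send
            delivered += 1
        return delivered

    def pending(self):
        return list(self._queue)


class FakeNetwork:
    """Stub network whose coverage can be switched on and off."""

    def __init__(self):
        self.in_coverage = False
        self.delivered = []

    def transmit(self, message):
        if not self.in_coverage:
            raise ConnectionError("no coverage")
        self.delivered.append(message)


network = FakeNetwork()
outbox = Outbox(network.transmit)
outbox.send("Running late")          # out of coverage: parked in the outbox
outbox.send("See you at 3")
print(outbox.pending())

network.in_coverage = True           # back in coverage
outbox.flush()
print(network.delivered)
```

A real device would call `flush()` whenever the radio reports that coverage is back, rather than waiting for the next send.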

This is the way all mobile apps should work. For example, a mobile browser should keep a constant cache of all your favorite web pages (for starters, how about all the ones you’ve bookmarked?) so you can look at them anytime. We couldn’t have done this sort of trick on a mobile device five years ago, but with the advent of micro hard drives and higher-speed USB connectors, there’s no excuse for not doing it.
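A bookmark cache along those lines might look something like the sketch below. It's an assumption-laden toy, not a description of any real browser: `PageCache` and `fake_fetch` are made-up names, the URL is a placeholder, and the fetch function is a stub that raises `ConnectionError` when there's no coverage.

```python
import time


class PageCache:
    """Hypothetical browser cache: serve live pages when possible, cached copies otherwise."""

    def __init__(self, fetch):
        self._fetch = fetch          # assumed to return page text or raise ConnectionError
        self._pages = {}             # url -> (text, time the copy was fetched)

    def refresh(self, bookmarks):
        """Re-download every bookmarked page we can reach; return the ones refreshed."""
        refreshed = []
        for url in bookmarks:
            try:
                self._pages[url] = (self._fetch(url), time.time())
                refreshed.append(url)
            except ConnectionError:
                continue             # keep whatever older copy we already have
        return refreshed

    def get(self, url):
        """Return (text, source), where source is 'live' or 'cached'."""
        try:
            text = self._fetch(url)
            self._pages[url] = (text, time.time())
            return text, "live"
        except ConnectionError:
            if url in self._pages:
                return self._pages[url][0], "cached"
            raise                    # never cached and no coverage: nothing to show


online = {"up": True}
site = {"http://example.com/news": "Monday headlines"}


def fake_fetch(url):
    # Stand-in for a real HTTP request.
    if not online["up"]:
        raise ConnectionError("no coverage")
    return site[url]


cache = PageCache(fake_fetch)
cache.refresh(site.keys())           # done while the device has a good connection
online["up"] = False                 # user walks out of coverage
text, source = cache.get("http://example.com/news")
print(text, source)
```

The device would run `refresh()` whenever it has a decent connection, so the cached copies stay reasonably fresh.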

Of course, once we’ve put the application logic on the device, and created a local cache of the data, what we’ve really done is create a completely new operating system for the device. That's another subject I'll come back to in a future post.

_______________

*This is an aside, but I tried to figure out one time exactly where an incoming message gets parked when it’s waiting to be delivered to your RIM device. Is it on a central RIM server in Canada somewhere, or does it get passed to a carrier server where it waits for delivery? I never was able to figure it out; please post a reply if you have the answer. The reason I wondered was because I wanted to compare the RIM architecture to what Microsoft’s doing with mobile Exchange. In Microsoft’s case, the message sits on your company’s Exchange server. If the server knows your device is online and knows the address for it, it forwards the message right away. Otherwise, it waits for the device to check in and announce where it is. So Microsoft’s system is a mix of push and pull. I don’t know if that’s a significant competitive difference from the way RIM works.
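For what it's worth, here's how I picture that mix of push and pull, as a toy model. It's based only on my description above, not on how Exchange is actually built; `MailServer`, `check_in`, and `go_offline` are names I invented for the illustration.

```python
class MailServer:
    """Toy model: push when the device's address is known, otherwise hold until check-in."""

    def __init__(self):
        self.known_address = {}      # device_id -> address, only while the device is online
        self.waiting = {}            # device_id -> messages held for later
        self.delivered = []          # (address, message) pairs, in delivery order

    def receive(self, device_id, message):
        address = self.known_address.get(device_id)
        if address is not None:
            self.delivered.append((address, message))                # push right away
        else:
            self.waiting.setdefault(device_id, []).append(message)   # hold on the server

    def check_in(self, device_id, address):
        # The device announces where it is, then gets everything that was held (pull).
        self.known_address[device_id] = address
        for message in self.waiting.pop(device_id, []):
            self.delivered.append((address, message))

    def go_offline(self, device_id):
        self.known_address.pop(device_id, None)


server = MailServer()
server.receive("phone-1", "Budget review moved")    # device unknown: held
server.check_in("phone-1", "10.0.0.7")              # held mail goes out
server.receive("phone-1", "Lunch?")                 # device known: pushed at once
print(server.delivered)
```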

The November 7 issue of BusinessWeek features this full-page ad for the LG VX9800 smart phone, which is currently available through Verizon.

The screen shows what looks like a video feed of a football game, and the “remote not included” headline implies that it’s a video product. But the presence of a keyboard implies e-mail, and look at the background of the photograph -- the phone is sitting on what looks like a polished granite table, and out the window you can see tall buildings, viewed from a height. It looks like we’re up in a corporate executive’s office.

So who’s this phone really for?

The text of the ad doesn’t help: “Now you can watch, listen, and enjoy all your favorite multimedia contents and exchange e-mails without missing a single call. With its sleek design, clarity of a mega-pixel camera, sounds of an audio player, easy-to-use QWERTY keypad, it’s the new mobile phone from LG!”

It’s just a feature list -- and, by the way, a feature list that reads like it was badly translated from Korean.

There’s no sense of who the product’s for, or what problems it’s supposed to solve in that customer’s life. Basically, this is a phone for geeks like me who enjoy playing with technology. And we all know what a big market that is -- we’re the people who made the Sony Clie a raging commercial success.

An added difficulty is that the features LG talks about don’t necessarily work the way you’d expect. The video service that comes with the phone shows only a small number of short clips; to get Outlook e-mail you have to run a redirector on your desktop computer; and the phone doesn’t even have a web browser. You can learn more in this PC Magazine review.

But I’m not all that concerned about customers being disappointed, because with this sort of feature-centric advertising, very few people are going to buy the phone anyway.

The ironic thing is that the people at LG are smarter than this. I’ve met with their smartphone folks. They’re bright, they learn fast, and LG definitely knows how to make cool hardware. But somewhere along the way they’re just not connecting with actual user needs.

Just like most of the other companies making smartphones today.

Epilog: Tonight (11/3) I saw that LG has created a television ad for the phone. We've now confirmed that the target user is a young guy with an untucked shirt and cool-looking girlfriend. Sounds like the geek aspirational market to me. Oh, and most of the commercial is...a feature list.